Machine Learning Basics

Machine Learning Basics: A Comprehensive Guide to Understanding the Core Concepts, Algorithms, and Tools

Machine Learning (ML) is transforming industries across the globe, revolutionizing how businesses, governments, and organizations approach problems, make predictions, and deliver value. If you’re new to the field or looking to deepen your understanding, this extensive post will serve as a detailed guide to the basics of machine learning. We will explore everything from the core concepts to the essential machine learning algorithms, including regression, classification, clustering, and dimensionality reduction. Additionally, we’ll provide an introduction to scikit-learn, one of the most powerful and user-friendly libraries for implementing machine learning in Python.

❉ Introduction to Machine Learning

Machine Learning is a subset of artificial intelligence (AI) that allows systems to learn from data, identify patterns, and make decisions without being explicitly programmed for each task. In traditional programming, developers write a set of rules for the computer to follow. In contrast, machine learning algorithms automatically adjust their operations based on the patterns and insights they extract from data.

The core idea behind machine learning is to develop models capable of improving their performance with experience, meaning that the more data these models are exposed to, the better they can become at making predictions.

Key Concepts of Machine Learning:

- Data: In ML, data is the cornerstone. It’s what the model learns from. Data can be numbers, text, images, or even time series data.

- Model: A model is a mathematical representation of a system. It’s built from data to make predictions or decisions based on the patterns the system learns.

- Training: This is the process where the model learns from data. During training, the model adjusts its internal parameters to minimize the error between its predictions and the actual outcomes.

- Prediction/Inference: Once the model is trained, it can be used to make predictions on new, unseen data. This is referred to as inference or prediction.
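To make "training" and "inference" concrete, here is a minimal sketch in plain NumPy. It assumes a one-feature linear model and hypothetical synthetic data. Training repeatedly nudges two parameters (w and b) down the gradient of the mean squared error, and inference applies the learned parameters to a new input. The learning rate and number of steps are arbitrary illustrative choices.

```python
import numpy as np

# Hypothetical synthetic data: y is roughly 2*x + 1 plus a little noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=100)
y = 2.0 * x + 1.0 + rng.normal(0, 0.5, size=100)

# Model parameters: the "internal parameters" adjusted during training
w, b = 0.0, 0.0
learning_rate = 0.01

# Training: gradient descent on the mean squared error
for step in range(5000):
    y_hat = w * x + b                 # current predictions
    error = y_hat - y                 # prediction minus actual outcome
    grad_w = 2 * np.mean(error * x)   # d(MSE)/dw
    grad_b = 2 * np.mean(error)       # d(MSE)/db
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

mse = np.mean((w * x + b - y) ** 2)
print(f"learned w={w:.2f}, b={b:.2f}, training MSE={mse:.3f}")

# Inference: apply the trained parameters to a new, unseen input
x_new = 4.0
print(f"prediction for x={x_new}: {w * x_new + b:.2f}")
```

The learned values land close to the 2 and 1 used to generate the data. They are not exactly equal to them because of the added noise.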

❉ Types of Machine Learning

Machine learning can be divided into three primary types, based on how the learning is done and how the data is structured:

Supervised Learning

Supervised learning involves training a model on a labeled dataset, where the input data (features) is paired with the correct output (labels). The model learns to map inputs to the correct outputs, and its performance is evaluated by comparing its predictions to the actual labels.

- Key Concepts in Supervised Learning:

- Training Data: A dataset containing both inputs (features) and corresponding outputs (labels).

- Test Data: A separate dataset used to evaluate the model’s performance after training.

- Learning Algorithm: The method by which the model learns the relationship between inputs and outputs. Common learning algorithms include Linear Regression, Decision Trees, Support Vector Machines, etc.

- Common Supervised Learning Algorithms:

- Regression: Predicts continuous values. A common example is Linear Regression, where the model fits a line to data points to predict an output based on an input.

- Classification: Predicts categorical labels. For example, Logistic Regression or Decision Trees can be used for tasks like determining if an email is spam or not.

Unsupervised Learning

Unsupervised learning is used when the data does not have labels. The goal is to find hidden structures or patterns in the data. The model tries to identify the relationships within the data without any supervision (i.e., no explicit feedback).

- Key Concepts in Unsupervised Learning:

- Clustering: The process of grouping data points so that points in the same cluster are more similar to each other than to points in other clusters. Algorithms like K-Means Clustering and DBSCAN are popular for this.

- Dimensionality Reduction: Reduces the number of features while retaining essential information. Principal Component Analysis (PCA) is one of the most widely used techniques.

- Common Unsupervised Learning Algorithms:

- K-Means Clustering: A simple and effective method for grouping data into k clusters based on feature similarity.

- Hierarchical Clustering: Builds a tree of clusters, useful for cases where the number of clusters is unknown.

- Principal Component Analysis (PCA): Reduces the dimensions of the dataset by projecting it onto a smaller set of new axes (principal components) that capture as much of the data’s variance as possible.
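As a quick illustration of dimensionality reduction, the sketch below applies scikit-learn's PCA to the built-in Iris dataset. Both the library and the dataset are introduced later in this post. The example compresses four features into two principal components, and explained_variance_ratio_ reports the fraction of the original variance that each component keeps.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Four measurements per flower: sepal length/width, petal length/width
X = load_iris().data
print("original shape:", X.shape)

# Project the (centered) data onto the two directions of greatest variance
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)
print("reduced shape:", X_reduced.shape)

# Fraction of the total variance captured by each component
print("explained variance ratio:", pca.explained_variance_ratio_)
print("total variance kept:", pca.explained_variance_ratio_.sum())
```

PCA centers the data but does not rescale it, so features measured on very different scales are often standardized first.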

Reinforcement Learning

Reinforcement learning involves training an agent to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties based on the actions it takes. The agent’s goal is to maximize cumulative rewards over time.

- Key Concepts in Reinforcement Learning:

- Agent: The learner or decision maker.

- Environment: The external system the agent interacts with.

- Actions: The choices the agent can make within the environment.

- Rewards/Punishments: The feedback given to the agent after taking an action, indicating how well it performed.
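The agent, environment, actions, and rewards loop can be sketched with tabular Q-learning, one common reinforcement learning algorithm that is listed later in this post. The environment here is a hypothetical five-cell corridor invented for illustration. The agent starts in cell 0, can move left or right, and receives a reward of 1 only when it reaches cell 4. The hyperparameters (alpha, gamma, epsilon, number of episodes) are arbitrary.

```python
import numpy as np

N_STATES = 5           # corridor cells 0..4; cell 4 is the goal
ACTIONS = [-1, +1]     # action 0 moves left, action 1 moves right
alpha, gamma, epsilon = 0.5, 0.9, 0.1   # learning rate, discount, exploration rate

rng = np.random.default_rng(0)
Q = np.zeros((N_STATES, len(ACTIONS)))  # estimated value of each (state, action) pair

def step(state, action):
    """Environment: apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

for episode in range(200):
    state, done = 0, False
    while not done:
        # Agent: epsilon-greedy choice (explore sometimes, otherwise exploit)
        if rng.random() < epsilon:
            action = int(rng.integers(len(ACTIONS)))
        else:
            best = np.flatnonzero(Q[state] == Q[state].max())
            action = int(rng.choice(best))  # break ties randomly
        next_state, reward, done = step(state, action)
        # Q-learning update: move Q toward reward + discounted best next value
        target = reward if done else reward + gamma * Q[next_state].max()
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state

policy = ["right" if np.argmax(q) == 1 else "left" for q in Q[:-1]]
print("greedy action in cells 0-3:", policy)
```

The reward is only received at the goal. The discount factor gamma spreads its value backward, so cells closer to the goal end up with higher Q-values for moving right.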

❉ Key Machine Learning Algorithms

In this section, we’ll explore some key machine learning algorithms in both supervised and unsupervised learning.

Regression Algorithms

Regression algorithms are used for predicting continuous numerical values based on input features.

- Linear Regression: One of the simplest algorithms, it assumes a linear relationship between the dependent and independent variables. The model aims to fit a line to the data that minimizes the sum of squared errors.

Linear Regression Equation: [math]y = \beta_0 + \beta_1 x[/math]

Where:

- [math]y[/math] is the dependent variable (target).

- [math]x[/math] is the independent variable (feature).

- [math]\beta_0[/math] is the intercept.

- [math]\beta_1[/math] is the coefficient (slope).

- Polynomial Regression: An extension of linear regression, polynomial regression is used when the relationship between variables is non-linear. The algorithm fits a polynomial curve to the data to capture non-linear patterns.
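The sketch below illustrates both regression models on hypothetical synthetic data. It first computes the simple linear regression coefficients with the standard closed-form least-squares formulas, [math]\beta_1 = \mathrm{cov}(x, y) / \mathrm{var}(x)[/math] and [math]\beta_0 = \bar{y} - \beta_1 \bar{x}[/math], and prints NumPy's polyfit result for comparison. It then fits a degree-2 polynomial with scikit-learn. PolynomialFeatures expands x into [x, x²], and a LinearRegression is fitted on the expanded features. This is one common way to implement polynomial regression, and the model remains linear in its coefficients.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(42)

# --- Simple linear regression via the closed-form least-squares solution ---
x = rng.uniform(0, 10, size=50)
y = 3.0 + 0.5 * x + rng.normal(0, 0.3, size=50)      # hypothetical data

beta_1 = np.cov(x, y, bias=True)[0, 1] / np.var(x)   # slope
beta_0 = y.mean() - beta_1 * x.mean()                # intercept
print(f"beta_0 = {beta_0:.2f}, beta_1 = {beta_1:.2f}")
print("np.polyfit [slope, intercept]:", np.polyfit(x, y, 1))

# --- Polynomial regression: a linear model on polynomial features ---
x_curve = np.linspace(-3, 3, 60)
y_curve = 1.0 - 2.0 * x_curve + 0.8 * x_curve**2 + rng.normal(0, 0.2, size=60)
X_curve = x_curve.reshape(-1, 1)   # scikit-learn expects a 2-D feature matrix

poly_model = make_pipeline(PolynomialFeatures(degree=2, include_bias=False),
                           LinearRegression())
poly_model.fit(X_curve, y_curve)

linear_only = LinearRegression().fit(X_curve, y_curve)
print("R^2, straight line: ", round(linear_only.score(X_curve, y_curve), 3))
print("R^2, degree-2 curve:", round(poly_model.score(X_curve, y_curve), 3))
print("polynomial coefficients [x, x^2]:", poly_model[-1].coef_)
```

On the curved data, the straight line misses the x² term. The degree-2 model captures it and scores a much higher R².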

Classification Algorithms

Classification algorithms are used when the output variable is categorical.

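- Logistic Regression: A popular classification algorithm that uses the logistic (sigmoid) function to model the probability of an outcome. Despite its name, it is a classification method rather than a regression method. It is most often used for binary tasks, but it also extends to multiclass problems.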

- Decision Trees: These algorithms split the data into subsets based on feature values, creating a tree-like model. Decision trees are intuitive and easy to interpret but can suffer from overfitting.

- Random Forest: An ensemble method that builds multiple decision trees, each on a random sample of the data and features, and combines their predictions. Compared with a single decision tree, this usually reduces overfitting and improves generalization.

- Support Vector Machines (SVM): SVMs find the hyperplane that separates the classes with the largest possible margin in the feature space. They are effective in high-dimensional spaces and can be used for both classification and regression tasks.
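Every scikit-learn classifier shares the same fit/predict interface, which is covered in the scikit-learn section below, so the four algorithms above can be compared side by side. This sketch uses the built-in Iris dataset and 5-fold cross-validation with mostly default hyperparameters (max_iter is raised for logistic regression). The printed scores apply to this dataset only and are not a general ranking of the algorithms.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

classifiers = {
    # Scale-sensitive models are wrapped together with a scaler in a pipeline
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC()),
}

results = {}
for name, clf in classifiers.items():
    scores = cross_val_score(clf, X, y, cv=5)   # accuracy on each of 5 folds
    results[name] = scores.mean()
    print(f"{name:20s} mean accuracy = {scores.mean():.3f}")
```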

Clustering Algorithms

Clustering algorithms are used to group similar data points without any prior labeling.

- K-Means Clustering: A popular clustering algorithm that assigns data points to the nearest centroid and iteratively updates the centroids until convergence.

Steps in K-Means Clustering:

1. Initialize k centroids.

2. Assign each point to the nearest centroid.

3. Recompute the centroids as the mean of assigned points.

4. Repeat steps 2 and 3 until the centroids no longer change.

- Hierarchical Clustering: Builds a hierarchy of clusters. It is particularly useful when the number of clusters is not known in advance.

- DBSCAN: A density-based clustering algorithm that can detect arbitrarily shaped clusters and is robust to noise (outliers).
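The four K-Means steps translate almost line for line into NumPy. This is a minimal sketch for illustration on hypothetical synthetic blobs. It assumes initialization by picking k random data points and keeps a centroid in place if its cluster ever becomes empty. It does not do multiple restarts, so an unlucky initialization can end in a poor local optimum. Library implementations such as scikit-learn's KMeans handle these details more carefully.

```python
import numpy as np

def kmeans(X, k, max_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    # Step 1: initialize k centroids by picking k distinct data points
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iter):
        # Step 2: assign each point to its nearest centroid (Euclidean distance)
        distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Step 3: recompute each centroid as the mean of its assigned points
        # (an empty cluster keeps its previous centroid)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Step 4: stop once the centroids no longer change
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return centroids, labels

# Hypothetical data: three well-separated 2-D blobs of 50 points each
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
X = np.vstack([c + rng.normal(0, 0.5, size=(50, 2)) for c in centers])

centroids, labels = kmeans(X, k=3)
print("centroids:\n", np.round(centroids, 2))
print("cluster sizes:", np.bincount(labels, minlength=3))
```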

❉ Popular Machine Learning Algorithms and Their Use Cases

- Linear Regression

- Type: Supervised (Regression)

- Description: Models the relationship between the input variables and the output as a linear function (a straight line when there is a single input).

- Use Case: Predicting house prices based on size and location.

- Logistic Regression

- Type: Supervised (Classification)

- Description: Estimates probabilities and classifies data into binary categories.

- Use Case: Email spam detection (spam or not spam).

- Decision Trees

- Type: Supervised (Classification/Regression)

- Description: Splits data into branches based on feature values.

- Use Case: Loan eligibility prediction.

- K-Means Clustering

- Type: Unsupervised (Clustering)

- Description: Groups data points into a predefined number (k) of clusters based on similarity.

- Use Case: Customer segmentation for targeted marketing.

- Support Vector Machines (SVM)

- Type: Supervised (Classification/Regression)

- Description: Finds the optimal boundary that separates different classes.

- Use Case: Handwritten digit recognition.

- Random Forest

- Type: Supervised (Classification/Regression)

- Description: Builds multiple decision trees and merges their outputs.

- Use Case: Predicting stock market trends.

- Principal Component Analysis (PCA)

- Type: Unsupervised (Dimensionality Reduction)

- Description: Reduces the dimensionality of data while retaining most of its variance.

- Use Case: Reducing features for faster computation in image processing.

- Neural Networks

- Type: Supervised/Unsupervised

- Description: Loosely inspired by the human brain; consists of connected layers of nodes (neurons).

- Use Case: Voice recognition, natural language processing.

- Gradient Boosting Machines (GBM)

- Type: Supervised (Classification/Regression)

- Description: Builds models iteratively to correct the errors of previous models.

- Use Case: Fraud detection.

- Reinforcement Learning (Q-Learning)

- Type: Reinforcement Learning

- Description: Learns actions by maximizing cumulative rewards over time.

- Use Case: Autonomous driving.

❉ Advanced Machine Learning Algorithms

As you grow in expertise, you may encounter more complex algorithms.

- Ensemble Learning

- Combines multiple models to improve performance.

- Bagging (e.g., Random Forest): Reduces variance.

- Boosting (e.g., XGBoost, AdaBoost): Reduces bias.

- Deep Learning Algorithms

- Involves multi-layer neural networks.

- Convolutional Neural Networks (CNNs): Used in image recognition.

- Recurrent Neural Networks (RNNs): Used for sequential data like text and time series.

- Generative Algorithms

- Models that generate new data similar to the training set.

- Generative Adversarial Networks (GANs): Used for image synthesis.

- Variational Autoencoders (VAEs): Used for dimensionality reduction and generative tasks.
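Bagging and boosting are both available in scikit-learn. The sketch below compares a single decision tree with BaggingClassifier (which bags decision trees by default), RandomForestClassifier, AdaBoostClassifier, and GradientBoostingClassifier on the built-in breast cancer dataset. XGBoost is a separate library and is not used here; GradientBoostingClassifier is scikit-learn's own gradient boosting implementation. The scores depend on the dataset and settings, so treat them as illustrative.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import (AdaBoostClassifier, BaggingClassifier,
                              GradientBoostingClassifier, RandomForestClassifier)
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

models = {
    "single decision tree": DecisionTreeClassifier(random_state=0),
    # Bagging: many trees fit on bootstrap samples, predictions combined across trees
    "bagging (trees)": BaggingClassifier(n_estimators=50, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
    # Boosting: models trained sequentially, each one focusing on earlier errors
    "AdaBoost": AdaBoostClassifier(n_estimators=100, random_state=0),
    "gradient boosting": GradientBoostingClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    scores[name] = cross_val_score(model, X, y, cv=5).mean()   # mean 5-fold accuracy
    print(f"{name:22s} {scores[name]:.3f}")
```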

❉ How Machine Learning Algorithms Work

- Training Phase:

- The algorithm processes historical data to learn patterns and relationships.

- It adjusts its internal parameters to minimize error or maximize accuracy.

- Testing/Validation Phase:

- The trained model is tested on unseen data to evaluate its performance.

- Prediction/Deployment Phase:

- The model is used to make predictions or decisions on new data.

❉ Evaluation Metrics for Machine Learning Algorithms

Choosing the right metric ensures that the algorithm’s performance aligns with the problem’s objectives.

- For Classification:

- Accuracy: Percentage of correct predictions.

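- Precision and Recall: Precision is the fraction of predicted positives that are actually positive. Recall is the fraction of actual positives that the model finds. In spam detection, for example, precision asks how many flagged emails are really spam, and recall asks how much of the spam was caught.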

- F1 Score: Harmonic mean of precision and recall.

- ROC-AUC: Evaluates model performance across all classification thresholds.

- For Regression:

- Mean Absolute Error (MAE): Measures the average magnitude of errors.

- Mean Squared Error (MSE): The average of the squared errors. Because the errors are squared, it penalizes larger errors more heavily than MAE.

- R² Score (Coefficient of Determination): Indicates the proportion of variance explained by the model.

- For Clustering:

- Silhouette Score: Measures how similar an object is to its cluster compared to other clusters.

- Within-Cluster Sum of Squares (WCSS): The sum of squared distances between each point and its cluster’s centroid. Lower values indicate more tightly grouped clusters.
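To see what these metrics compute, the sketch below evaluates several of them by hand on small hypothetical arrays and checks the results against scikit-learn's metric functions.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, f1_score, mean_absolute_error,
                             mean_squared_error, precision_score, r2_score,
                             recall_score)

# --- Classification (1 = positive class, e.g. "spam") ---
y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0])
y_pred = np.array([1, 1, 0, 1, 0, 1, 1, 0])

tp = np.sum((y_pred == 1) & (y_true == 1))   # true positives
fp = np.sum((y_pred == 1) & (y_true == 0))   # false positives
fn = np.sum((y_pred == 0) & (y_true == 1))   # false negatives

accuracy = np.mean(y_pred == y_true)
precision = tp / (tp + fp)   # of everything flagged positive, how much was right
recall = tp / (tp + fn)      # of all actual positives, how many were found
f1 = 2 * precision * recall / (precision + recall)   # harmonic mean
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
# → accuracy=0.625 precision=0.600 recall=0.750 f1=0.667

assert np.isclose(accuracy, accuracy_score(y_true, y_pred))
assert np.isclose(precision, precision_score(y_true, y_pred))
assert np.isclose(recall, recall_score(y_true, y_pred))

# --- Regression ---
y_true_r = np.array([3.0, 5.0, 2.0, 7.0])
y_pred_r = np.array([2.5, 5.0, 3.0, 8.0])
errors = y_pred_r - y_true_r

mae = np.mean(np.abs(errors))
mse = np.mean(errors ** 2)
r2 = 1 - np.sum(errors ** 2) / np.sum((y_true_r - y_true_r.mean()) ** 2)
print(f"MAE={mae:.4f} MSE={mse:.4f} R^2={r2:.4f}")
# → MAE=0.6250 MSE=0.5625 R^2=0.8475

assert np.isclose(mae, mean_absolute_error(y_true_r, y_pred_r))
assert np.isclose(mse, mean_squared_error(y_true_r, y_pred_r))
```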

❉ Choosing the Right Algorithm

The choice of algorithm depends on:

- Type of Problem: Classification, regression, clustering, etc.

- Size of Data: Some algorithms handle large datasets better than others.

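- Quality of Data: Noisy or messy data needs more preprocessing and makes complex models more prone to overfitting. Clean, well-structured data often works well with simpler models.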

- Interpretability: Simple models like linear regression are easier to interpret.

- Computational Resources: Neural networks require more computational power compared to simpler algorithms.

❉ Introduction to scikit-learn

Now that we have a solid understanding of the core machine learning concepts and algorithms, let’s introduce scikit-learn, a powerful and user-friendly library for implementing machine learning models in Python.

What is scikit-learn?

Scikit-learn is one of the most widely used Python libraries for machine learning. It provides simple and efficient tools for data mining and data analysis. It’s built on top of other popular libraries like NumPy and SciPy, making it highly compatible and easy to integrate into data science projects.

Key Features of scikit-learn:

- Algorithms: Scikit-learn includes a wide range of machine learning algorithms for classification, regression, clustering, and more.

- Data Preprocessing: Tools for scaling, transforming, and cleaning data.

- Model Evaluation: Built-in functions to assess model performance using various metrics like accuracy, precision, recall, etc.

- Model Selection: Functions to select the best model using cross-validation and hyperparameter tuning.

Setting Up the Environment

Before we dive into coding, we need to ensure that the environment is ready. If you haven’t installed scikit-learn yet, you can do so by running the following command:

pip install scikit-learn

Additionally, you’ll need libraries such as NumPy, Pandas, and Matplotlib for data manipulation and visualization:

pip install numpy pandas matplotlib

Loading Data in scikit-learn

Machine learning begins with the data, so the first step is to load your dataset. Scikit-learn provides a collection of datasets for practice, such as the Iris dataset (a famous dataset for classification tasks).

Here’s an example of how to load a dataset using scikit-learn:

from sklearn.datasets import load_iris

# Load the Iris dataset

data = load_iris()

# Extract features and target

X = data.data # Feature matrix

y = data.target # Target variable

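You can also load your own dataset with Pandas. Scikit-learn accepts Pandas DataFrames and Series directly, so you only need to separate the features from the target. In this example, the label column is assumed to be named 'target':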

import pandas as pd

# Load your own dataset

df = pd.read_csv('your_dataset.csv')

# Split data into features (X) and target (y)

X = df.drop('target', axis=1)

y = df['target']

Data Preprocessing

Data preprocessing is a critical step in machine learning. It ensures that the data is in a suitable format for the model. Here are some common preprocessing steps in scikit-learn:

- Handling Missing Values

Many datasets have missing values. Scikit-learn offers tools to handle them, such as using SimpleImputer to replace missing values with the mean, median, or most frequent value.

from sklearn.impute import SimpleImputer

# Create an imputer to replace missing values with the mean

imputer = SimpleImputer(strategy='mean')

# Apply imputation on the features

X_imputed = imputer.fit_transform(X)

- Scaling Features

For many algorithms, especially distance-based ones such as K-Nearest Neighbors, feature scaling is crucial. Scikit-learn provides StandardScaler to standardize the features by removing the mean and scaling to unit variance. To avoid leaking test-set information, the scaler should ideally be fitted on the training data only, as the complete Iris example later in this post does.

from sklearn.preprocessing import StandardScaler

# Standardize the features

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

- Encoding Categorical Variables

For models to work with categorical data (e.g., string labels), you need to encode them as numerical values. Scikit-learn provides LabelEncoder, which is intended for encoding the target labels, and OneHotEncoder, which is the usual choice for categorical input features.

from sklearn.preprocessing import LabelEncoder

# Encode the categorical target variable as integers

encoder = LabelEncoder()

y_encoded = encoder.fit_transform(y)

Splitting the Data into Training and Test Sets

A key principle in machine learning is to train the model on one part of the data (training set) and evaluate it on another (test set). This way, the evaluation reflects performance on unseen data and reveals overfitting. Scikit-learn makes this easy with the train_test_split function.

from sklearn.model_selection import train_test_split

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X_scaled, y_encoded, test_size=0.2, random_state=42)

Training a Machine Learning Model

Once the data is ready, you can train a machine learning model. Scikit-learn provides a wide range of models for different tasks. Let’s walk through an example of training a Logistic Regression model for classification.

from sklearn.linear_model import LogisticRegression

# Initialize the model

model = LogisticRegression()

# Train the model on the training data

model.fit(X_train, y_train)

For other algorithms, the process is similar. Here’s how you can train a K-Nearest Neighbors (KNN) model:

from sklearn.neighbors import KNeighborsClassifier

# Initialize the model

knn_model = KNeighborsClassifier(n_neighbors=3)

# Train the model on the training data

knn_model.fit(X_train, y_train)

Making Predictions

After training the model, you can use it to make predictions on new, unseen data. For example:

# Make predictions on the test set

y_pred = model.predict(X_test)

For regression tasks, you would use the same method, but the predictions would be continuous values rather than categorical labels.

Evaluating the Model

Once you’ve made predictions, it’s essential to evaluate the model’s performance. Scikit-learn provides several metrics for evaluation, such as accuracy, precision, recall, F1-score, and more.

- Classification Metrics

For classification tasks, you can use accuracy_score, confusion_matrix, and classification_report:

from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Calculate accuracy

accuracy = accuracy_score(y_test, y_pred)

print(f'Accuracy: {accuracy:.2f}')

# Print the classification report (includes precision, recall, F1-score)

print(classification_report(y_test, y_pred))

# Print the confusion matrix

print(confusion_matrix(y_test, y_pred))

- Regression Metrics

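For regression tasks, you can use mean_squared_error (MSE), mean_absolute_error (MAE), or the R² score. Here, y_test and y_pred are assumed to come from a regression model:

from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Calculate MSE, MAE, and R² for a regression model

mse = mean_squared_error(y_test, y_pred)

mae = mean_absolute_error(y_test, y_pred)

r2 = r2_score(y_test, y_pred)

print(f'Mean Squared Error: {mse}')

print(f'Mean Absolute Error: {mae}')

print(f'R^2 Score: {r2}')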

Cross-Validation

Cross-validation is a technique for assessing a model’s performance by splitting the data into several subsets (folds). The model is trained on different combinations of folds and tested on the held-out fold each time. Averaging over the folds gives a more reliable performance estimate than a single train/test split and helps detect overfitting.

from sklearn.model_selection import cross_val_score

# Perform 5-fold cross-validation

cv_scores = cross_val_score(model, X_scaled, y_encoded, cv=5)

# Print the cross-validation scores

print(f'Cross-Validation Scores: {cv_scores}')

Hyperparameter Tuning

Most machine learning algorithms have hyperparameters that can be adjusted to improve performance. Scikit-learn provides GridSearchCV and RandomizedSearchCV to perform hyperparameter tuning.

For example, to tune the hyperparameters of a K-Nearest Neighbors classifier, you can use GridSearchCV:

from sklearn.model_selection import GridSearchCV

# Define the parameter grid

param_grid = {'n_neighbors': [3, 5, 7, 9], 'weights': ['uniform', 'distance']}

# Initialize GridSearchCV

grid_search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5)

# Fit the grid search to the data

grid_search.fit(X_train, y_train)

# Print the best parameters

print(f'Best Parameters: {grid_search.best_params_}')
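One caveat applies to the snippets above. Scaling the full dataset before splitting or cross-validating lets information from the held-out data leak into preprocessing. A common remedy is to put the scaler and the model in a Pipeline, so that each cross-validation fold fits the scaler on its own training portion only. The sketch below repeats the KNN grid search in that form on the Iris data. Parameter names are prefixed with the step name; kneighborsclassifier is the name that make_pipeline assigns automatically.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y)

# Scaler + model as one estimator; the scaler is refit inside every CV fold
pipe = make_pipeline(StandardScaler(), KNeighborsClassifier())

param_grid = {
    "kneighborsclassifier__n_neighbors": [3, 5, 7, 9],
    "kneighborsclassifier__weights": ["uniform", "distance"],
}
grid_search = GridSearchCV(pipe, param_grid, cv=5)
grid_search.fit(X_train, y_train)

print("Best parameters:", grid_search.best_params_)
print(f"Best cross-validation accuracy: {grid_search.best_score_:.3f}")
# The refit best pipeline is evaluated once on the untouched test set
print(f"Test accuracy: {grid_search.score(X_test, y_test):.3f}")
```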

❉ Classification Example with Iris Dataset

# Import required libraries

import numpy as np

import pandas as pd

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Step 1: Load the dataset

iris = load_iris()

X = iris.data # Feature matrix

y = iris.target # Target variable

# Step 2: Explore the dataset

print(f"Feature Names: {iris.feature_names}")

print(f"Target Names: {iris.target_names}")

print(f"First 5 rows of features:\n{X[:5]}")

print(f"First 5 target values:\n{y[:5]}")

# Step 3: Split the dataset into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Step 4: Preprocess the data (feature scaling)

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Step 5: Train the model (Logistic Regression)

model = LogisticRegression()

model.fit(X_train_scaled, y_train)

# Step 6: Make predictions

y_pred = model.predict(X_test_scaled)

# Step 7: Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print(f"\nAccuracy: {accuracy:.2f}")

print("\nClassification Report:")

print(classification_report(y_test, y_pred, target_names=iris.target_names))

print("\nConfusion Matrix:")

print(confusion_matrix(y_test, y_pred))

# Step 8: Make predictions on new data

new_data = np.array([[5.1, 3.5, 1.4, 0.2]]) # Example new flower measurements

new_data_scaled = scaler.transform(new_data)

predicted_class = model.predict(new_data_scaled)

predicted_class_name = iris.target_names[predicted_class[0]]

print(f"\nPrediction for new data {new_data}: {predicted_class_name}")

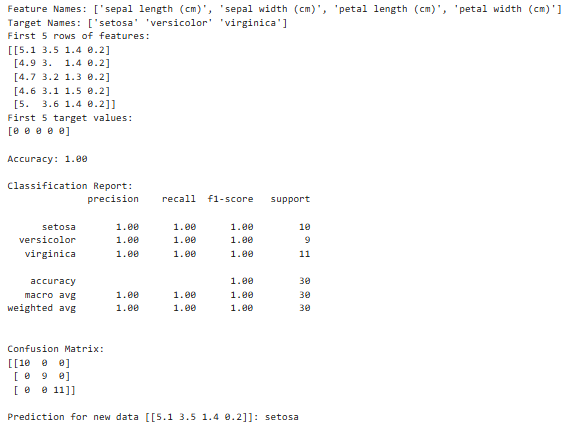

Sample Output

Data Loading:

The load_iris() function is used to load the Iris dataset, which is included in scikit-learn.

Features (sepal and petal measurements) and targets (flower species) are extracted and printed.

Data Splitting:

The dataset is split into training and testing sets using train_test_split(). The test size is 20% of the total data.

Data Preprocessing:

The features are scaled with StandardScaler() so that all features have similar scales. The scaler is fitted on the training set only and then applied to both the training and test sets.

Model Training:

A LogisticRegression model is initialized and trained on the scaled training data.

Making Predictions:

The model predicts the species for the test data using predict().

Model Evaluation:

Evaluation metrics such as accuracy, confusion matrix, and classification report are calculated and printed.

Predicting on New Data:

A new flower measurement is scaled with the same fitted scaler and passed to the model, which predicts its species.

❉ Challenges and Limitations of Machine Learning Algorithms

- Overfitting: When the model learns the noise in the data instead of the underlying pattern. Regularization and cross-validation can help.

- Underfitting: When the model is too simple to capture the data’s complexity. Using a more complex algorithm or feature engineering can resolve this.

- Bias-Variance Tradeoff: Striking a balance between bias (error due to assumptions) and variance (error due to sensitivity to training data).

- Scalability: Some algorithms struggle with very large datasets (e.g., K-Means on billions of points).

- Interpretability: Algorithms like Neural Networks are often considered “black boxes.”
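Overfitting is easy to observe directly. This sketch uses the built-in breast cancer dataset and compares an unconstrained decision tree with one limited to an arbitrary max_depth=3. Limiting depth is a simple form of regularization for trees. A training accuracy far above the test accuracy is the typical symptom of overfitting. The exact numbers depend on the data split.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

results = {}
for label, max_depth in [("unconstrained", None), ("max_depth=3", 3)]:
    tree = DecisionTreeClassifier(max_depth=max_depth, random_state=0)
    tree.fit(X_train, y_train)
    train_acc = tree.score(X_train, y_train)   # accuracy on data the tree has seen
    test_acc = tree.score(X_test, y_test)      # accuracy on held-out data
    results[label] = (train_acc, test_acc)
    print(f"{label:14s} train={train_acc:.3f} test={test_acc:.3f} gap={train_acc - test_acc:.3f}")
```

The unconstrained tree keeps splitting until it fits the training set almost perfectly, including its noise. The shallow tree gives up some training accuracy in exchange for a simpler model.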

❉ Conclusion

Machine Learning is a vast and exciting field that is transforming industries and opening new possibilities for innovation. In this post, we’ve covered the fundamentals of machine learning, from basic concepts to key algorithms and practical implementation using scikit-learn. Whether you’re working on classification, regression, or clustering tasks, scikit-learn provides a robust and user-friendly framework for developing machine learning models.

By mastering these fundamental techniques, you can begin solving real-world problems with data, whether through predictive models, customer insights, or automated decision-making. The next step is to explore advanced topics like deep learning, model optimization, and deployment.